Little panic in prod!

It won’t surprise anyone that I manage Kubernetes clusters in production (and also for fun. Yes I’m weird). And sometimes, when you have slightly unusual workloads, you get some funny surprises.

To give you some context, I have an “on-prem” cluster of about sixty physical baremetal nodes, on which I run (among other things) very short ephemeral jobs.

This generates a lot of traffic on the etcd database (lots of pod creation/destruction events) and it has already caused us problems in the past (especially for metrics collection - we’ve crashed Prometheus even with lots of RAM).

But it was holding up.

And then, disaster strikes…

Recently, I also started adding lots of new “policies” on Kyverno (for those who don’t know, I wrote an article on the subject in french).

And I discovered somewhat to my detriment that Kyverno adds A LOT of pressure on etcd, by creating tons of events (policy evaluation for each Kubernetes object, and since I have many…). You can tweak that but that’s not the point of the article.

To such an extent that at some point, all write operations on the API server were returning the following error:

Error: etcdserver: mvcc: database space exceeded

Smells like a full DB 😬😬😬. Yet, no alerts on my disk spaces!? Weird.

Anyway, to make matters worse, since the etcd pods were no longer healthy because the database was supposedly full, the API server crashed after a while and I had no control over anything to debug.

PANIC!

Getting things back on track

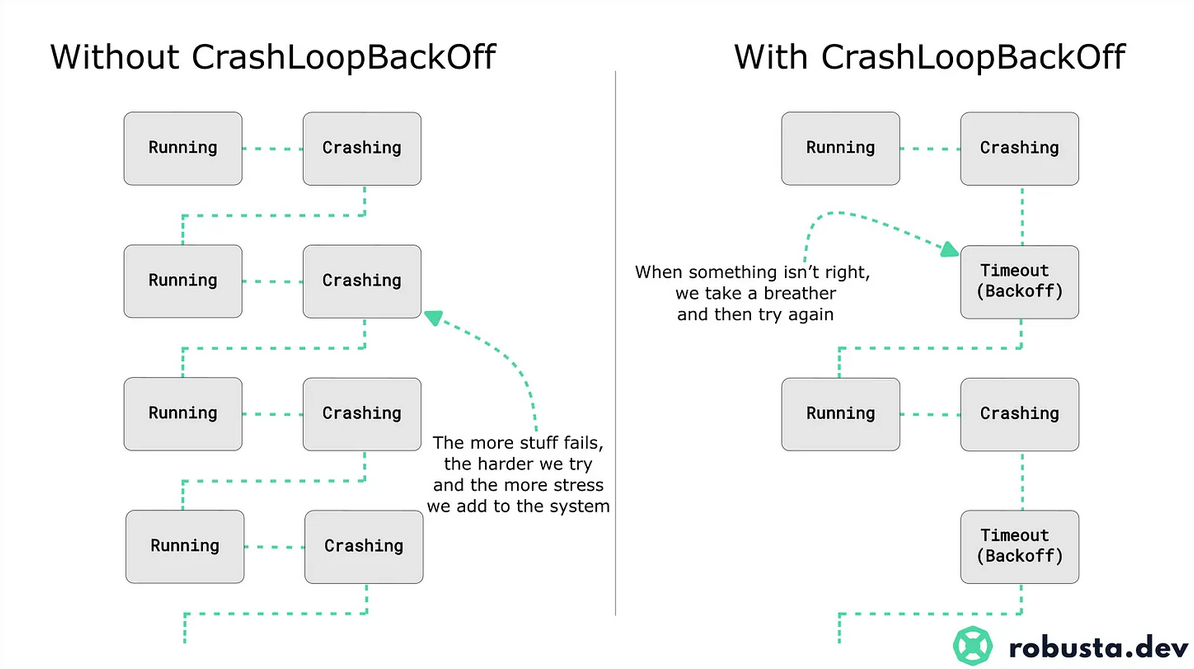

Little “pro tip” when your Kubernetes control plane is in trouble - at some point nothing works anymore because the etcd/apiserver pods go into crashloop backoff, being alternately down and not restarted because of the “backoff”.

A good way to work around this, when you have control over the control plane (so, not on managed services, because in that case, it’s not your problem), is to restart the kubelet on all control plane nodes at the same time.

Why? Because restarting the kubelet resets all the counters so your pods aren’t in the “backoff” phase for too long, which avoids issues like “not enough etcd members are on, so I self-sabotage” and so on.

Diagram source: Natan Yellin’s Substack for robusta.dev

Cleaning up the etcd database

Now that’s done, I had enough living etcd pods (even if not operational) for a short time.

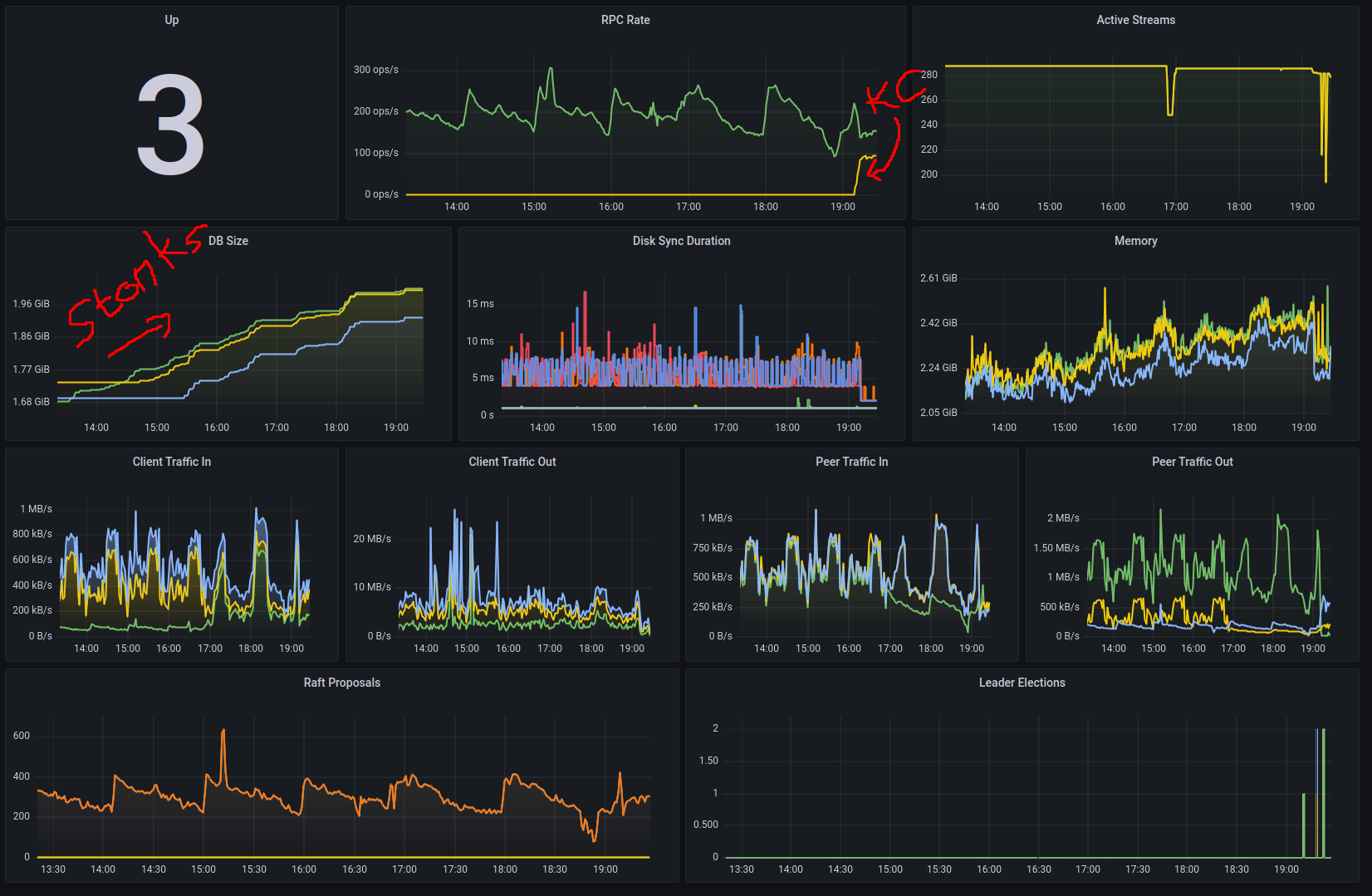

A quick look at my Grafana graphs shows that something indeed “happened” when I activated the new Kyverno policies.

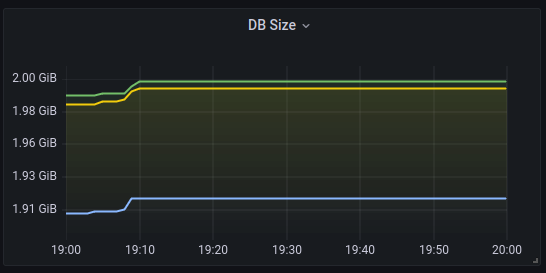

And “BY SHEER COINCIDENCE” (or is it?), it stops working when the size of 2 out of 3 etcd databases reaches 2 GB

A quick Internet search confirms that my database is full, and that the solution to my problem is to compact then defragment my database.

Since everything is a bit broken, you can find bash commands to send to get the latest revision, compact then defragment the database:

# get latest revision

REVISION=$(ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key endpoint status --write-out="json" | egrep -o '"revision":[0-9]*' | egrep -o '[0-9].*')

# compact

ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key compact ${REVISION}

# defrag

ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key defrag

# SUPER MEGA IMPORTANT

ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key alarm disarm

I added a SUPER MEGA IMPORTANT comment on the last instruction because, since I’m a bit silly, I didn’t initially run it.

Well yeah. The idea of disarming an alarm seemed completely incongruous to me. The alarm, I want to keep it. Right?

But actually, this is the normal behavior. The etcd cluster is designed to block itself, and do nothing more, as long as the alarm hasn’t been disarmed, even if the space issue is resolved…

Ok, I’ll know for next time

What does “mvcc: database space exceeded” mean and how do I fix it? The multi-version concurrency control data model in etcd keeps an exact history of the keyspace. Without periodically compacting this history (e.g., by setting –auto-compaction), etcd will eventually exhaust its storage space. If etcd runs low on storage space, it raises a space quota alarm to protect the cluster from further writes. So long as the alarm is raised, etcd responds to write requests with the error mvcc: database space exceeded.

To recover from the low space quota alarm:

- Compact etcd’s history.

- Defragment every etcd endpoint. - Disarm the alarm.

memberID:11111111111111111111111 alarm:NOSPACE

Note: since etcd wasn’t coming back to life, I ran the compaction command 50-ish more times and it was returning an error that made me think there was another problem. But no, it’s just that the compaction was done correctly ;-).

error: code = OutOfRange desc = etcdserver: mvcc: required revision has been compacted"}

Error: etcdserver: mvcc: required revision has been compacted

Preventing it from happening again

OK, now that the DB is restored, how do we make sure this doesn’t happen again?

In reality, the default parameters of kubeadm’s (local) etcd database are not great.

First, we see that I hit the 2 GB limit, the default limit in etcd, even though I only have 60-70 nodes and a few thousand pods (ok, I create hundreds of thousands per day, but still).

Rancher’s documentation recommends raising this limit to 5 GB (Tuning etcd for Large Installations).

So we’re going to modify the quota-backend-bytes value.

Next, we need to enable automatic compaction for our etcd. Since I use kubeadm and a kubeadmcfg file, I need to add a section in my file to tune the parameters:

etcd:

local:

extraArgs:

quota-backend-bytes: "5368709120"

auto-compaction-retention: "1"

auto-compaction-mode: periodic

Then regenerate the manifests on all my control plane nodes (unfortunately, this doesn’t happen automatically, cf official Kubernetes documentation - Reflecting ClusterConfiguration changes on control plane nodes)

kubeadm init phase etcd local --config kubeadmcfg.yaml

Note: be careful, the expected arguments are strings, so make sure to surround all numeric values with quotes:

json: cannot unmarshal number into Go struct field LocalEtcd.etcd.local.extraArgs of type string

Last point, while it’s possible to tell etcd to perform compaction all by itself, apparently defrag is not automatic.

Most of the time this might not be a problem, but since I’m extremely aggressive with pod creation/destruction + Kyverno policy reports, I also had to automate this “defrag” part.

Until I find something better, I’ve added a cron job:

00 */3 * * * /usr/bin/etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints https://@IP:2379 defrag --cluster

Well, it wasn’t the most fun operation of the year, but now it works again 😂.

Additional sources

- etcd blog - How to debug large db size issue?

- etcd documentation - History compaction: v3 API Key-Value Database

- Kubernetes Community forums - Etcdserver: mvcc: database space exceeded

- grosser.it - ETCD db size based compaction

- gist grosser.it - script for automatic compaction/defrag (a bit aggressive)

- Platform9 KB - Cluster Operations Fail With Error - etcdserver: mvcc: database space exceeded