A slightly crazy documentation project

When I started writing my book “Kubernetes: 50 solutions for development workstations and production clusters”, I quickly ran into a problem: there are an infinite number of ways to deploy Kubernetes.

Well, not literally infinite, but still… many. Too many?

To structure my book and choose which solutions I would cover, I did what any good nerd would do: I created a spreadsheet.

A very large spreadsheet.

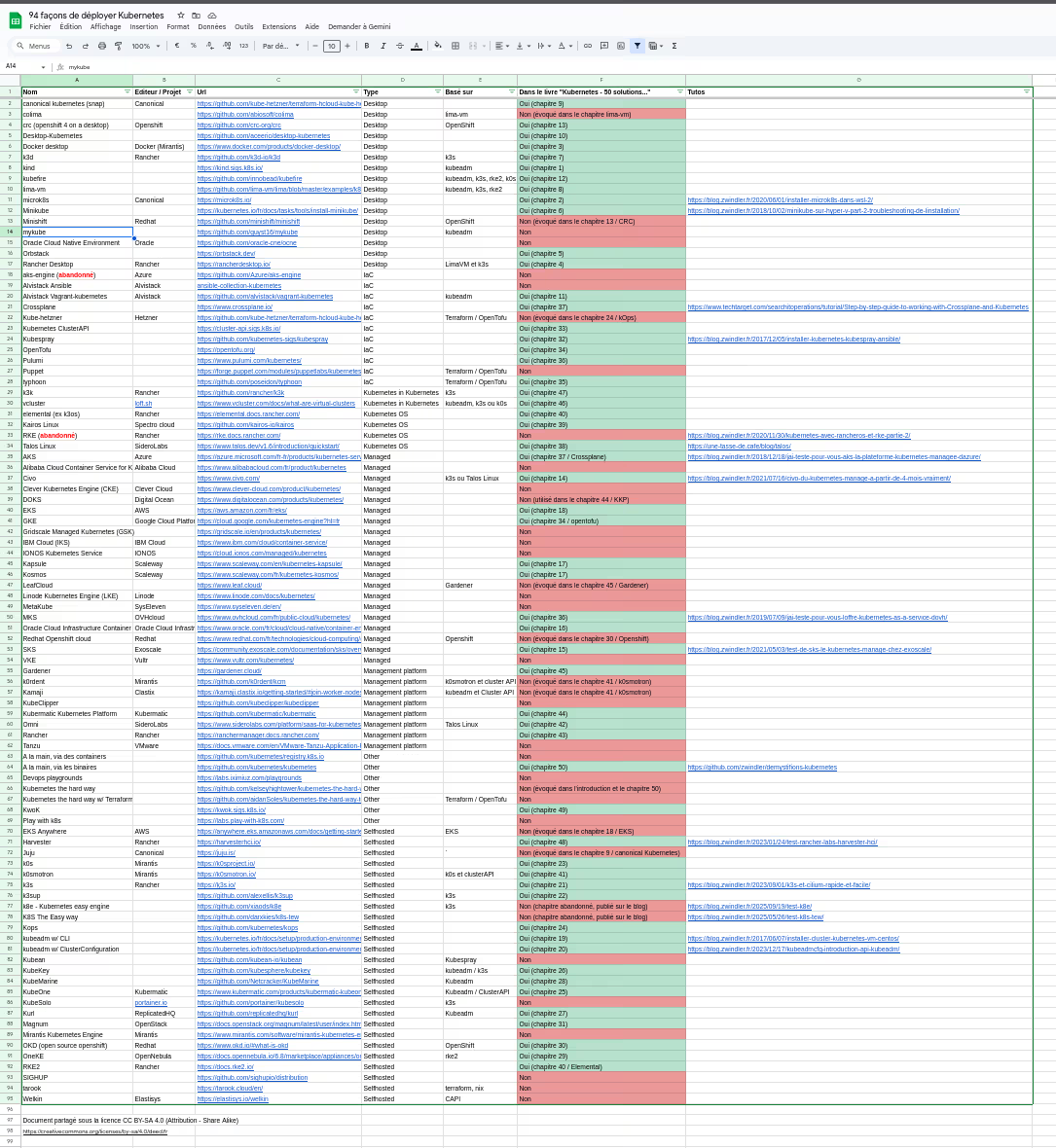

It's a Google Sheet that currently lists 93 different methods for deploying Kubernetes. Since I don't like letting things go to waste, I'm sharing it with you today under the CC BY-SA 4.0 license (Attribution-ShareAlike):

What does this spreadsheet contain?

The spreadsheet is structured with several columns to help you navigate this jungle:

- Product name (and publisher, when relevant)

- Product URL: I tried to stick to open source products (or public managed services), although there are a few exceptions

- Solution type (I’ll come back to this)

- “Based on”: this one is quite funny… many projects are layers on top of kubeadm, k3s or k0s. Not all of them say so openly; I found out by trying them or digging into the code

And, perhaps less interesting for some of you (these columns may disappear):

- Do I talk about it in my book?

- Do I talk about it on my blog?

The different tool categories

To structure my thinking first, and then my book, I tried to sort these methods into categories.

Some are obvious (with a managed offering, you immediately see what it's about); others are more personal (and therefore debatable).

Kubernetes on the desktop (local development)

Tools for developing locally on your machine: Minikube, kind, Docker Desktop and the like.

Infrastructure as Code (IaC)

Tools that let you describe a Kubernetes deployment as code (OpenTofu, Crossplane, Pulumi…)

Kubernetes in Kubernetes

Because why keep things simple when you can make them… recursive? 🤯 For now, this category only contains vCluster and k3k.

Specialized OSes

Operating systems designed specifically to run Kubernetes. Talos Linux obviously comes to mind first, but it's not the only one ;-P.

Managed Kubernetes (turnkey cloud offerings)

No need to spell it out: the EKS / AKS / GKE trio comes to mind immediately, but there are also French offerings (OVHcloud Managed Kubernetes, and soon Clever Cloud 😏)

Cluster management platforms

This category stands a bit apart: these platforms let you manage many Kubernetes clusters and even spin up new clusters managed by other clusters… They are often proper Rube Goldberg machines, like Gardener or, worse, Kubermatic Kubernetes Platform. There are also some slightly more fun options, like Kamaji.

Automation tools for self-hosted

Solutions that automate deployment on your own machines, like kubeadm, k3s and k0s (the trio that underpins about 50% of the other solutions on the market).

The revelation: everyone copies from their neighbor

While filling in this spreadsheet, I discovered something funny: a large majority of tools don’t reinvent the wheel.

Many projects are actually layers or wrappers around the three base solutions I just mentioned (kubeadm, k3s and k0s). Some are also built on top of lesser-known solutions.

The information isn't always advertised: sometimes I found it in a blog post, or even by digging into the solution's internals.

This column is what really makes you realize that, beyond the apparent diversity of these deployment solutions, there's actually one big standard and a few variations.

I hope to find more clues like these to fill in this column further :).
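To show how a “Based on” column turns into a figure like the “about 50%” quoted for kubeadm, k3s and k0s, here is a minimal Python sketch that tallies a CSV export of the spreadsheet. It assumes the export has columns named `Product` and `Based on`; those names and the `tool-a`… rows are hypothetical placeholders, not real data from the sheet.

```python
import csv
import io
from collections import Counter

# Hypothetical export: header names and rows are placeholders,
# not the actual content of the spreadsheet.
SAMPLE_CSV = """Product,Based on
tool-a,kubeadm
tool-b,k3s
tool-c,kubeadm
tool-d,
tool-e,k0s
tool-f,k3s
"""

# The three "base" solutions discussed in the article.
TRIPLET = {"kubeadm", "k3s", "k0s"}


def tally_bases(csv_text: str) -> tuple[Counter, int]:
    """Return (products per declared base, total number of products).

    Empty "Based on" cells (unknown or built from scratch) count towards
    the total but not towards any base.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    counts: Counter = Counter()
    total = 0
    for row in reader:
        total += 1
        base = (row.get("Based on") or "").strip()
        if base:
            counts[base] += 1
    return counts, total


def triplet_share(counts: Counter, total: int) -> float:
    """Fraction of all products built on kubeadm, k3s or k0s."""
    if total == 0:
        return 0.0
    return sum(n for base, n in counts.items() if base in TRIPLET) / total


if __name__ == "__main__":
    counts, total = tally_bases(SAMPLE_CSV)
    print(counts.most_common())
    print(f"{triplet_share(counts, total):.0%} of {total} products")
```

With a real export, only the column name would likely need adjusting. Keeping tools with an empty “Based on” cell in the denominator is one possible choice; excluding them would give a higher share.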

A living document

This spreadsheet is not static. The list of tools keeps growing (I discover new ones almost every week).

If you know a method that's not listed, don't hesitate to let me know on social media.

Note: as a reminder, I primarily target open source solutions or public managed services (I just removed Mirantis Kubernetes Engine for this reason).

So, what do I still have to test?

As I've already teased on reputable social networks, the things I haven't tested yet but that could tempt me include: