Introduction

Today, I’m going to talk about a Kubernetes feature that went beta in 1.34: Pod-level resources.

And to illustrate this new feature, I’ll use the example of a pod for which I want to have Guaranteed QoS.

For a while, I was convinced (I don’t know why, because the doc is clear 🤔) that to get Guaranteed QoS, all containers in the pod had to have limits=requests and, on top of that, identical values across all containers (which is false). That would be super annoying, because sometimes you have a big main app and a tiny sidecar, and it makes no sense to give them the same values.

If we reread the doc, what matters for Guaranteed QoS (official doc) is that:

- Each container must have `cpu.limit = cpu.request`
- Each container must have `memory.limit = memory.request`

But the values can be different between containers!

To convince yourself, just test this manifest:

    apiVersion: v1
    kind: Pod
    metadata:
      name: ctrlevel-demo1
      namespace: pod-resources-example
    spec:
      containers:
      - name: ctrlevel-demo1-ctr-1
        image: nginx
        resources:
          limits:
            cpu: "0.8"
            memory: "100Mi"
          requests:
            cpu: "0.8"
            memory: "100Mi"
      - name: ctrlevel-demo1-ctr-2
        image: fedora
        resources:
          limits:
            cpu: "0.2"
            memory: "200Mi"
          requests:
            cpu: "0.2"
            memory: "200Mi"
        command:
        - sleep
        - inf

And guess what? QoS Class: Guaranteed.

The first container has 0.8 CPU / 100Mi, the second has 0.2 CPU / 200Mi, and it’s no problem as long as each container individually respects limit=request for cpu and ram.
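To check the class yourself once the pod is created, you can read it straight from the pod status. Here is a minimal sketch: `qos_class` is a hypothetical helper name of mine (not a kubectl subcommand), and falling back to the `default` namespace when none is given is my assumption.

```shell
# Hypothetical helper: print the QoS class recorded in a pod's status.
# Usage: qos_class <pod> [namespace]   (namespace falls back to "default")
qos_class() {
  kubectl get pod "$1" -n "${2:-default}" -o jsonpath='{.status.qosClass}'
}
```

For the manifest above, `qos_class ctrlevel-demo1 pod-resources-example` is the call to run (the namespace has to exist before you apply the manifest).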

Well. Now that I’ve publicly admitted my mistake (shame shame shame), we’re still going to talk about Pod-level resources, because this feature remains useful.

So, what are Pod-level resources good for?

In some cases, containers (init, sidecar, …) consume very few resources. Some are also injected on the fly across many workloads (service mesh, auto-instrumentation). Explicitly specifying limits/requests for these “small” ancillary containers can be tedious, time-consuming, and difficult to tune when there are many of them.

Typical example: we inject a sidecar that just exposes Prometheus metrics. It consumes 10m of CPU and 20Mi of RAM. You could write:

    containers:
    - name: my-app
      resources:
        limits:
          cpu: "1"
          memory: "100Mi"
        requests:
          cpu: "1"
          memory: "100Mi"
    - name: metrics-exporter
      resources:
        limits:
          cpu: "10m"
          memory: "20Mi"
        requests:
          cpu: "10m"
          memory: "20Mi"

But frankly, it’s a pain. And if tomorrow the exporter needs a bit more memory in some cases but not all, you have to modify the manifest to increase it everywhere, or handle the exception…

With Pod-level resources, you can do:

    # file: podlevel-demo1.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: podlevel-demo1
      namespace: pod-resources-example
    spec:
      resources:
        limits:
          cpu: "1"
          memory: 100Mi
        requests:
          cpu: "1"
          memory: 100Mi
      initContainers:
      - name: sidecar-test
        image: busybox:latest
        command: ["sh", "-c", "while true; do sleep 3600; done"]
        restartPolicy: Always
      containers:
      - name: podlevel-demo1-ctr
        image: vish/stress
        args:
        - -cpus
        - "2"

Here:

- The `resources` are declared at the Pod `spec` level
- The containers themselves DO NOT have resource declarations
- The sidecar (declared as an initContainer with `restartPolicy: Always`, see my previous article on sidecars) shares the Pod’s resources with the container.

In this example, it’s one container and one sidecar, but it could very well have been any other mix of containers, classic init containers, and sidecars.

Let’s test it!

    kubectl create namespace pod-resources-example
    kubectl apply -f podlevel-demo1.yaml

Now, let’s verify that we got Guaranteed QoS with this one-liner from hell 😈:

    kubectl get pod podlevel-demo1 -n pod-resources-example -o jsonpath=$'Pod-level resources:\n Requests: {.spec.resources.requests}\n Limits: {.spec.resources.limits}\n\nContainer podlevel-demo1-ctr:\n Requests: {.spec.containers[0].resources.requests}\n Limits: {.spec.containers[0].resources.limits}\n\nSidecar sidecar-test:\n Requests: {.spec.initContainers[0].resources.requests}\n Limits: {.spec.initContainers[0].resources.limits}\n\nQoS Class: {.status.qosClass}\n'

Result:

    Pod-level resources:
     Requests: {"cpu":"1","memory":"100Mi"}
     Limits: {"cpu":"1","memory":"100Mi"}

    Container podlevel-demo1-ctr:
     Requests:
     Limits:

    Sidecar sidecar-test:
     Requests:
     Limits:

    QoS Class: Guaranteed

The individual containers DO NOT have declared resources, but the Pod as a whole has resources. And we still get Guaranteed QoS!
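The one-liner above hard-codes index 0 for the container and the sidecar, so it only fits this exact pod. If you want the same check on a pod with any number of containers, a JSONPath `range` loop does the job. This is only a sketch: `pod_resources` is a hypothetical helper name, and the `default` namespace fallback is my assumption.

```shell
# Hypothetical helper: list every init container and regular container
# of a pod, each followed by whatever it declares under .resources.
# Usage: pod_resources <pod> [namespace]   (namespace falls back to "default")
pod_resources() {
  kubectl get pod "$1" -n "${2:-default}" \
    -o jsonpath='{range .spec.initContainers[*]}{.name}{"\t"}{.resources}{"\n"}{end}{range .spec.containers[*]}{.name}{"\t"}{.resources}{"\n"}{end}'
}
```

Calling `pod_resources podlevel-demo1 pod-resources-example` prints one line per container, which makes it easy to spot the ones that rely entirely on the Pod-level values.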

Everything works as expected.

You can also mix pod level and container level

Beyond this example, know that it’s possible to mix container level (classic) with pod level.

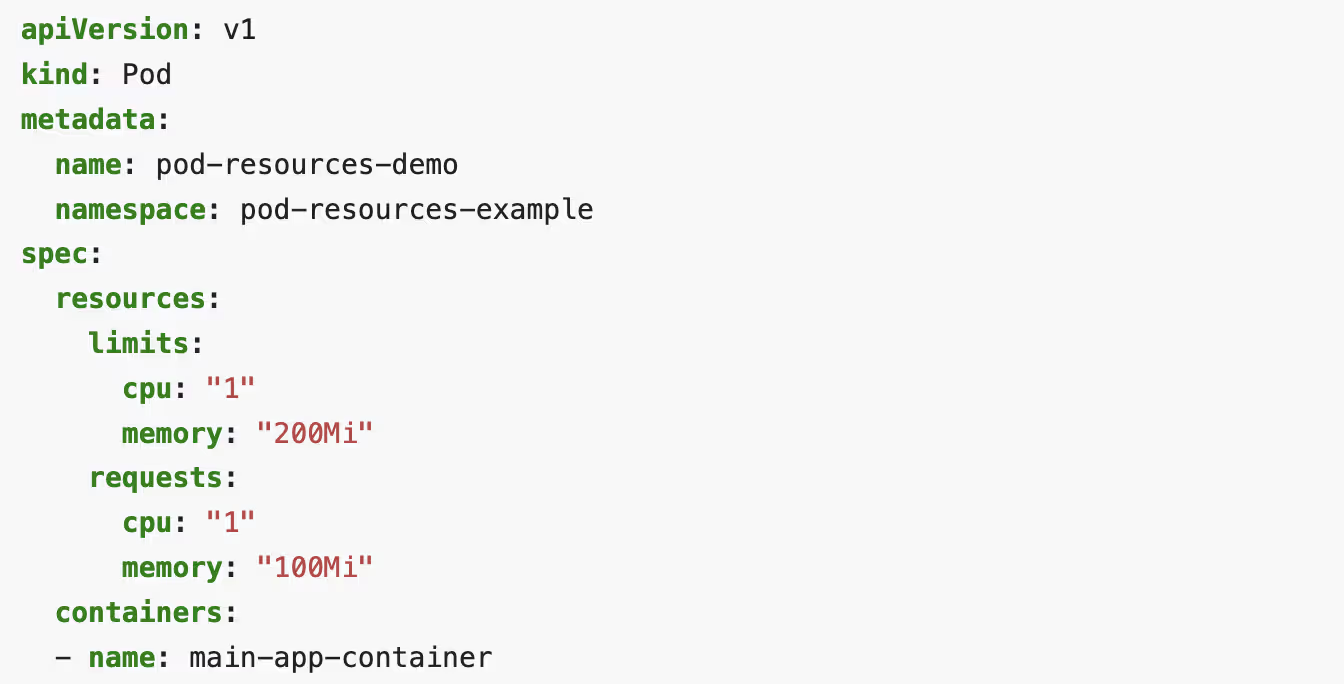

Here’s an example inspired by the official documentation:

    # file: podlevel-demo2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: podlevel-demo2
      namespace: pod-resources-example
    spec:
      resources:
        limits:
          cpu: "1"
          memory: "200Mi"
        requests:
          cpu: "1"
          memory: "200Mi"
      containers:
      - name: podlevel-demo2-ctr-1
        image: nginx
        resources:
          limits:
            cpu: "0.5"
            memory: "100Mi"
          requests:
            cpu: "0.5"
            memory: "100Mi"
      - name: podlevel-demo2-ctr-2
        image: fedora
        command:
        - sleep
        - inf

Here, the podlevel-demo2-ctr-1 container declares its own resources, podlevel-demo2-ctr-2 declares none at all, and we also set resources for the Pod as a whole.

For info, this example Pod is also Guaranteed, despite the absence of limits/requests on podlevel-demo2-ctr-2:

    kubectl get pod podlevel-demo2 -n pod-resources-example -o jsonpath=$'Pod-level resources:\n Requests: {.spec.resources.requests}\n Limits: {.spec.resources.limits}\n\nContainer podlevel-demo2-ctr-1:\n Requests: {.spec.containers[0].resources.requests}\n Limits: {.spec.containers[0].resources.limits}\n\nContainer podlevel-demo2-ctr-2:\n Requests: {.spec.containers[1].resources.requests}\n Limits: {.spec.containers[1].resources.limits}\n\nQoS Class: {.status.qosClass}\n'

Result:

    Pod-level resources:
     Requests: {"cpu":"1","memory":"200Mi"}
     Limits: {"cpu":"1","memory":"200Mi"}

    Container podlevel-demo2-ctr-1:
     Requests: {"cpu":"500m","memory":"100Mi"}
     Limits: {"cpu":"500m","memory":"100Mi"}

    Container podlevel-demo2-ctr-2:
     Requests:
     Limits:

    QoS Class: Guaranteed
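You may have noticed that the manifest says `cpu: "0.5"` while the output says `500m`: it’s the same quantity, since 1 CPU equals 1000 millicores. When you mix pod-level and container-level values, it can help to convert everything to millicores before comparing. Here is a small sketch for that; `to_millicores` and `sum_millicores` are hypothetical helpers of mine, and they only handle plain decimals (`0.5`, `1`) and the `m` suffix, not every quantity format Kubernetes accepts.

```shell
# Hypothetical helper: convert a CPU quantity ("0.5", "1", "10m") to millicores.
# Assumption: only plain decimals and the "m" suffix are handled.
to_millicores() {
  awk -v v="$1" 'BEGIN {
    if (v ~ /m$/) { sub(/m$/, "", v); printf "%d\n", v }   # already millicores
    else          { printf "%d\n", v * 1000 + 0.5 }        # cores -> millicores, rounded
  }'
}

# Hypothetical helper: add up several CPU quantities, result in millicores.
sum_millicores() {
  total=0
  for q in "$@"; do
    total=$(( total + $(to_millicores "$q") ))
  done
  echo "$total"
}
```

For instance, `sum_millicores 0.8 0.2` adds up the two containers of the very first manifest, and `to_millicores 0.5` gives the value kubectl displays for podlevel-demo2-ctr-1.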

Conclusion

Pod-level resources are a feature that simplifies life in certain use cases, especially when using lightweight sidecars or containers injected on the fly.

Is it revolutionary? No. Will it change your life? Probably not. But it can always be useful.

We learn something new every day. Even (especially?) when we mess up. 😌